Author: Carlos Varela

Associate Professor of Computer Science at Rensselaer Polytechnic Institute

This post appeared originally in The Conversation.

The apparent connection between fatal airplane crashes in Indonesia and Ethiopia centers around the failure of a single sensor. I know what that’s like: A few years ago, while I was flying a Cessna 182-RG from Albany, New York, to Fort Meade, Maryland, my airspeed indicator showed that I was flying at a speed so slow that my plane was at risk of no longer generating enough lift to stay in the air.

Had I trusted my airspeed sensor, I would have pushed the plane’s nose down in an attempt to regain speed, and possibly put too much strain on the aircraft’s frame, or gotten dangerously close to the ground. But even small aircraft are packed with sensors: While worried about my airspeed, I noticed that my plane was staying at the same altitude, the engine was generating the same amount of power, the wings were meeting the air at a constant angle and I was still moving over the ground at the same speed I had been before the airspeed allegedly dropped.

So instead of overstressing and potentially crashing my plane, I was able to fix the problematic sensor and continue my flight without further incident. As a result, I started investigating how computers can use data from different aircraft sensors to help pilots understand whether there’s a real emergency happening, or something much less severe.

Boeing’s response to its crashes has included designing a software update that will rely on two sensors instead of one. That may not be enough.

Cross-checking sensor data

As a plane defies gravity, aerodynamic principles expressed as mathematical formulas govern its flight. Most of an aircraft’s sensors are intended to monitor elements of those formulas, to reassure pilots that everything is as it should be – or to alert them that something has gone wrong.

My team developed a computer system that looks at information from many sensors, comparing their readings to each other and to the relevant mathematical formulas. This system can detect inconsistent data, indicate which sensors most likely failed and, in certain circumstances, use other data to estimate the correct values that these sensors should be delivering.

For instance, my Cessna encountered problems when the primary airspeed sensor, called a “pitot tube,” froze in cold air. Other sensors on board gather related information: GPS receivers measure how quickly the aircraft is covering ground. Wind speed data is available from computer models that forecast weather prior to the flight. Onboard computers can calculate an estimated airspeed by combining GPS data with information on the wind speed and direction.
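The calculation described above is the classic wind triangle: the aircraft's velocity through the air equals its velocity over the ground minus the wind's velocity. What follows is a minimal Python sketch of that vector subtraction, not the author's implementation. The function name and parameters are illustrative. It assumes angles in degrees clockwise from north and a wind direction given as the direction the wind blows from, which is the usual meteorological convention. It also ignores the conversion between the true airspeed it returns and the indicated airspeed a pitot tube reports, which depends on altitude and temperature.

```python
import math


def estimate_airspeed(ground_speed_kt, ground_track_deg, wind_speed_kt, wind_from_deg):
    """Estimate airspeed (knots) from GPS ground velocity and forecast wind.

    Angles are degrees clockwise from true north. wind_from_deg is the
    direction the wind blows FROM (meteorological convention).
    """
    # Ground velocity as (east, north) components.
    track = math.radians(ground_track_deg)
    ground_e = ground_speed_kt * math.sin(track)
    ground_n = ground_speed_kt * math.cos(track)

    # The wind vector points where the wind blows TO: FROM direction + 180 degrees.
    wind_to = math.radians(wind_from_deg + 180.0)
    wind_e = wind_speed_kt * math.sin(wind_to)
    wind_n = wind_speed_kt * math.cos(wind_to)

    # Wind triangle: air velocity = ground velocity - wind velocity.
    return math.hypot(ground_e - wind_e, ground_n - wind_n)


if __name__ == "__main__":
    # 490 kt over the ground heading north, 10 kt wind from the south (a tailwind).
    print(round(estimate_airspeed(490, 0, 10, 180)))  # 480
```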

If the computer’s estimated airspeed agrees with the sensor readings, most likely everything is fine. If they disagree, then something is wrong – but what? It turns out that these calculations disagree in different ways, depending on which one – or more – of the GPS, wind data or airspeed sensors is wrong.
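A single comparison between the pitot reading and the estimate only says that something disagrees. One simple way to picture how the pattern of disagreement can point to a culprit is to ask which input changed abruptly: an airliner cannot lose hundreds of knots of airspeed in a second, so a sudden jump in one source while the others hold steady is a strong hint. The sketch below is a hypothetical heuristic for illustration, not the team's fault-isolation method. It assumes the forecast wind has already been reduced to a single along-track component (positive for a tailwind), and its 20-knot tolerance is an invented value.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    pitot_kt: float       # airspeed reported by the pitot tube
    gps_ground_kt: float  # ground speed from GPS
    tailwind_kt: float    # forecast wind along the track (+ tailwind, - headwind)


def diagnose(prev: Sample, curr: Sample, tol_kt: float = 20.0) -> str:
    """Flag a pitot/GPS/wind disagreement and guess which input caused it.

    Hypothetical heuristic: the input that jumped most since the previous
    sample is the most likely culprit, because real speed changes are gradual.
    """
    estimate = curr.gps_ground_kt - curr.tailwind_kt
    if abs(curr.pitot_kt - estimate) <= tol_kt:
        return "consistent"
    # Size of each input's change since the previous sample.
    jumps = {
        "pitot": abs(curr.pitot_kt - prev.pitot_kt),
        "gps": abs(curr.gps_ground_kt - prev.gps_ground_kt),
        "wind": abs(curr.tailwind_kt - prev.tailwind_kt),
    }
    suspect = max(jumps, key=lambda name: jumps[name])
    return f"inconsistent, suspect {suspect}"


if __name__ == "__main__":
    print(diagnose(Sample(480, 490, 10), Sample(180, 490, 10)))
```

A sensor that drifts slowly would slip past this particular rule, which is one reason a real system would combine several checks rather than rely on any single one.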

A test with real data

We tested our computer program with real data from the 2009 crash of Air France Flight 447. The post-crash investigation revealed that three different pitot tubes froze up, delivering an erroneous airspeed reading and triggering a chain of events ending in the plane plunging into the Atlantic Ocean, killing 228 passengers and crew.

The flight data showed that when the pitot tubes froze, the airspeed they registered suddenly dropped from 480 knots to 180 knots – so slow that the autopilot turned itself off and alerted the human pilots that there was a problem.

But the onboard GPS recorded that the plane was traveling across the ground at 490 knots. And computer models of weather indicated the wind was coming from the rear of the plane at about 10 knots.

When we fed those data to our computer system, it detected that the pitot tubes had failed, and estimated the plane’s real airspeed within five seconds. It also detected when the pitot tubes thawed again, about 40 seconds after they froze, and was able to confirm that their readings were again reliable.

When one sensor fails, other equipment can provide data to detect the failure and even estimate values for the failing sensor.
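Once a failure is detected, the estimate can stand in for the failed sensor until the two agree again, which is how a monitor can both flag a frozen pitot tube and later confirm that it has recovered. This minimal sketch assumes a constant along-track tailwind and a fixed, hypothetical 20-knot agreement tolerance. The series in the usage example are synthetic numbers chosen to echo the 480, 180, 490 and 10 knot figures above; they are not the recorded Air France 447 flight data.

```python
def monitor(pitot_kt, gps_ground_kt, tailwind_kt, tol_kt=20.0):
    """Yield (t, airspeed_to_use, pitot_trusted) for each time step.

    While the pitot reading disagrees with GPS ground speed minus tailwind,
    the estimate is substituted; once they agree again, the pitot is trusted.
    """
    for t, (pitot, ground) in enumerate(zip(pitot_kt, gps_ground_kt)):
        estimate = ground - tailwind_kt
        trusted = abs(pitot - estimate) <= tol_kt
        yield t, (pitot if trusted else estimate), trusted


if __name__ == "__main__":
    # Synthetic series: a pitot tube that freezes for two samples, then recovers.
    pitot = [480, 480, 180, 180, 478]
    gps = [490] * 5
    for t, airspeed, trusted in monitor(pitot, gps, tailwind_kt=10):
        print(t, airspeed, "pitot OK" if trusted else "pitot FAILED, using estimate")
```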

A different sort of test

We also used our system to identify what happened to Tuninter Flight 1153, which ditched into the Mediterranean Sea in 2005 on its way from Italy to Tunisia, killing 16 of the 39 people aboard.

After the accident, the investigation revealed that maintenance workers had mistakenly installed the wrong fuel quantity indicator on the plane, so it reported 2,700 kg of fuel was in the tanks, when the plane was really carrying only 550 kg. Human pilots didn’t notice the error, and the plane ran out of fuel.

Fuel is heavy, though, and its weight affects the performance of an aircraft. A plane with too little fuel would have handled differently than one with the right amount. To calculate whether the plane was behaving as it should, with the right amount of fuel on board, we used the aerodynamic mathematical relationship between airspeed and lift. When a plane is in level flight, lift equals weight. Everything else being the same, a heavier plane should have been going slower than the Tuninter plane was.
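The level-flight balance can be written with the standard lift equation, L = ½ρV²SC_L, where ρ is air density, V is true airspeed, S is wing area and C_L is the lift coefficient, which depends on angle of attack. Setting lift equal to weight gives the weight that the aircraft's flight behavior implies. That weight can then be compared with the weight implied by the fuel gauge. The Python sketch below assumes C_L is already known from angle of attack and aircraft data. The function names, the 5% tolerance and the airframe mass in the usage example are hypothetical; only the 550 kg and 2,700 kg fuel figures come from the account above, and the example is not a reconstruction of the Tuninter flight.

```python
G = 9.80665  # standard gravity, m/s^2


def implied_weight_n(rho_kg_m3, airspeed_m_s, wing_area_m2, lift_coefficient):
    """Weight (N) implied by steady level flight, where lift equals weight:
    W = L = 0.5 * rho * V**2 * S * C_L."""
    return 0.5 * rho_kg_m3 * airspeed_m_s ** 2 * wing_area_m2 * lift_coefficient


def fuel_reading_plausible(reported_fuel_kg, mass_without_fuel_kg, implied_weight, tol_frac=0.05):
    """True if the weight implied by the fuel gauge matches the aerodynamic estimate."""
    reported_weight = (mass_without_fuel_kg + reported_fuel_kg) * G
    return abs(reported_weight - implied_weight) <= tol_frac * implied_weight


if __name__ == "__main__":
    # Hypothetical cruise condition; not data from any real flight.
    w = implied_weight_n(rho_kg_m3=1.0, airspeed_m_s=100.0, wing_area_m2=50.0, lift_coefficient=0.5)
    print(w)                                         # 125000.0
    print(fuel_reading_plausible(550, 12_200, w))    # True
    print(fuel_reading_plausible(2_700, 12_200, w))  # False
```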

Our program models only cruise phases of flight, in which the plane is in steady, level flight – not accelerating or changing altitude. But it would have been sufficient to detect that the plane was too light and alert the pilots, who could have turned around or landed elsewhere to refuel. Adding information about other phases of flight could improve the system’s accuracy and responsiveness.
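Restricting a lift-equals-weight check to cruise means first discarding samples in which the aircraft is climbing, descending or changing speed. A minimal filter might look like the following. The FlightSample type and both thresholds (100 feet per minute of vertical speed and 0.1 m/s² of acceleration) are assumptions made for illustration, not values from the team's program.

```python
from dataclasses import dataclass


@dataclass
class FlightSample:
    vertical_speed_fpm: float  # climb (+) or descent (-) rate, feet per minute
    accel_m_s2: float          # longitudinal acceleration, m/s^2


def cruise_only(samples, vs_limit_fpm=100.0, accel_limit_m_s2=0.1):
    """Keep only samples close enough to steady, level flight for the lift = weight check."""
    return [
        s for s in samples
        if abs(s.vertical_speed_fpm) <= vs_limit_fpm and abs(s.accel_m_s2) <= accel_limit_m_s2
    ]


if __name__ == "__main__":
    data = [FlightSample(0, 0.0), FlightSample(1500, 0.0), FlightSample(-80, 0.05)]
    print(cruise_only(data))  # keeps the first and last samples
```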

The full range of data about Lion Air 610 and Ethiopian Airlines 302 is not yet available to the public, but early reports suggest there was a problem with one of the angle-of-attack sensors. My research team developed a method to check that device’s accuracy based on the plane’s airspeed.

We used aerodynamics and a flight simulator to measure how variations in the angle of attack – the steepness with which the wings meet the oncoming air – changed the horizontal and vertical speed of a Cessna 172. The data were consistent with the performance of an actual Cessna 172 in flight. Using our model and system, we can distinguish between an actual emergency – a dangerously high angle of attack – and a failing sensor providing erroneous data.
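In steady, level flight the same lift equation ties angle of attack to airspeed. The lift coefficient needed is C_L = 2W/(ρV²S), and below the stall the lift coefficient rises roughly linearly with angle of attack, C_L ≈ C_L0 + a·α. Inverting that line gives the angle of attack expected at a given airspeed. The sketch below uses this textbook linear model rather than the simulator-derived data the team used. Every coefficient and the 3-degree tolerance are hypothetical placeholders, not Cessna 172 values.

```python
def expected_aoa_deg(weight_n, rho_kg_m3, airspeed_m_s, wing_area_m2, cl0, cl_per_deg):
    """Angle of attack (degrees) needed for steady level flight at this airspeed.

    Assumes a linear lift curve C_L = cl0 + cl_per_deg * alpha, valid only below the stall.
    """
    cl_required = 2.0 * weight_n / (rho_kg_m3 * airspeed_m_s ** 2 * wing_area_m2)
    return (cl_required - cl0) / cl_per_deg


def aoa_sensor_suspect(measured_deg, expected_deg, tol_deg=3.0):
    """True if the vane reading is implausibly far from what airspeed implies."""
    return abs(measured_deg - expected_deg) > tol_deg


if __name__ == "__main__":
    # Hypothetical light-aircraft numbers for illustration only.
    alpha = expected_aoa_deg(10_000, 1.0, 50.0, 16.0, cl0=0.3, cl_per_deg=0.1)
    print(round(alpha, 2))                  # 2.0
    print(aoa_sensor_suspect(20.0, alpha))  # True: this reading contradicts the airspeed
```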

Using math to confirm safe flight

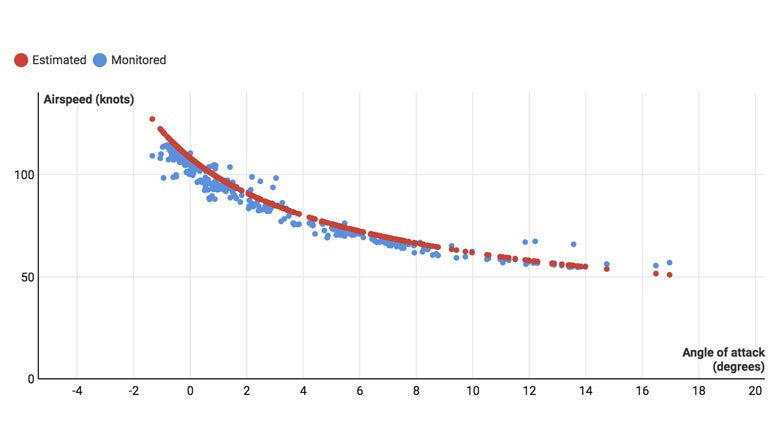

The “Estimated” curve is what should happen in normal flight conditions for a Cessna 172, according to aerodynamic principles. The “Monitored” dots show what actually happened during a test flight. If the sensors had reported an airspeed and angle-of-attack combination very far from the red curve, a watchdog system could have alerted the pilot that one of the sensors was malfunctioning.
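A watchdog of this kind could compare each monitored point with the estimated curve and raise an alert only when the deviation persists, so that a single noisy sample does not trigger a warning. In this hypothetical sketch the curve is a short table of (airspeed, angle of attack) points, interpolated linearly with Python's bisect module. The table values, the 3-degree tolerance and the three-sample persistence rule are all assumptions, not data read from the figure.

```python
from bisect import bisect_left


def curve_aoa(curve, airspeed):
    """Expected angle of attack at `airspeed`, linearly interpolated from a
    list of (airspeed, aoa) points sorted by airspeed; clamped at the ends."""
    speeds = [s for s, _ in curve]
    i = bisect_left(speeds, airspeed)
    if i == 0:
        return curve[0][1]
    if i == len(curve):
        return curve[-1][1]
    (s0, a0), (s1, a1) = curve[i - 1], curve[i]
    return a0 + (a1 - a0) * (airspeed - s0) / (s1 - s0)


def watchdog(curve, samples, tol_deg=3.0, persist=3):
    """Return the index of the sample at which an alert fires, or None.

    An alert needs `persist` consecutive (airspeed, aoa) samples whose
    angle of attack is more than `tol_deg` away from the curve.
    """
    run = 0
    for i, (airspeed, aoa) in enumerate(samples):
        if abs(aoa - curve_aoa(curve, airspeed)) > tol_deg:
            run += 1
        else:
            run = 0
        if run >= persist:
            return i
    return None


if __name__ == "__main__":
    estimated = [(60, 8.0), (80, 4.0), (100, 2.0)]  # hypothetical curve points
    monitored = [(70, 6.5), (90, 3.0), (90, 12.0), (90, 12.5), (90, 13.0)]
    print(watchdog(estimated, monitored))  # 4
```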

The actual numbers for a Boeing 737 Max 8 would be different, of course, but the principle is the same: the mathematical relationship between angle of attack and airspeed lets the readings of each be double-checked against the other, which helps identify faulty sensors.
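The same idea suggests why two sensors alone may not be enough. If two angle-of-attack vanes disagree, nothing in the pair says which one is wrong, but an independent estimate derived from airspeed can break the tie. The function below is a hypothetical illustration of that arbitration, not Boeing's software update or the team's system. It assumes a model-based expected angle is already available and uses an invented 2-degree agreement tolerance.

```python
def pick_aoa(left_deg, right_deg, model_deg, agree_tol_deg=2.0):
    """Choose an angle-of-attack value from two vanes plus a model-based estimate.

    Returns (value_to_use, status). The model estimate (e.g. derived from
    airspeed) is used only as a tiebreaker when the two vanes disagree.
    """
    if abs(left_deg - right_deg) <= agree_tol_deg:
        return (left_deg + right_deg) / 2.0, "vanes agree"
    # Two disagreeing vanes cannot say which is wrong; trust the one nearer the model.
    if abs(left_deg - model_deg) < abs(right_deg - model_deg):
        return left_deg, "right vane suspect"
    return right_deg, "left vane suspect"


if __name__ == "__main__":
    # Left vane stuck high, right vane close to what airspeed implies.
    print(pick_aoa(21.0, 2.0, 2.2))  # (2.0, 'left vane suspect')
```

If the model input is itself wrong, this rule will trust the wrong vane, which is one more reason to cross-check several different sensor types rather than lean on any single one.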

Better still

As my team continues to develop flight data analysis software, we’re also working on supplying it with better data. One potential source could be letting airplanes communicate directly with each other about weather and wind conditions in specific locations at particular altitudes. We are also working on methods to precisely describe safe operating conditions for flight software that relies on sensor data.

Sensors do fail, but even when that happens, automated systems can be safer and more efficient than human pilots. As flight becomes more automated and increasingly reliant on sensors, it is imperative that flight systems cross-check data from different sensor types, to safeguard against otherwise potentially fatal sensor faults.