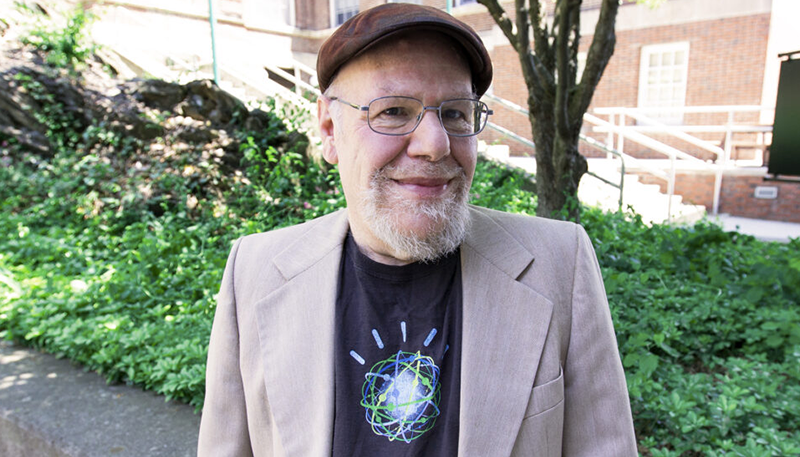

You could barely open a newspaper or turn on the news last year without hearing about another development in artificial intelligence (AI), and with those developments came a lot of bold claims about what it was for and what it could do. At a certain point, it became difficult to separate fact from fiction. Fortunately, RPI has a lot of experts on AI, among them James Hendler, Ph.D., director of the Future of Computing Institute and Tetherless World Constellation Professor of Computer, Web, and Cognitive Science — one of the pioneers of the field. We sat down with him to talk about what AI is capable of and where it might lead us.

What were the major AI milestones of last year?

The biggest milestones were the public releases of AI technologies that had been in development for a few years. OpenAI led the way with DALL-E, an image-generation program, and followed soon after with ChatGPT. Microsoft, Google, Meta, and others had been working on these technologies, but they were hesitant to release them while they still made a fair number of errors. When OpenAI released their programs, the other companies followed suit.

Meanwhile, other kinds of AI have been deployed — in some cases taking advantage of the noise around the language models to avoid publicity. For example, face recognition technology has been used fairly broadly. Some uses have been benign, like on your phone or to help you sort photos. Others have been deployed in ways that could have serious consequences. Police use of face recognition has led to arrests of innocent people. External lighting can lead to false matches, and error rates are higher for minority populations.

What are the biggest risks of AI right now?

The Skynet scenario from The Terminator is not a likely outcome. The possibility that AI is an existential threat, in my mind, is near zero. However, AI is being developed for military use, and while legitimate governments are generally being careful, some technologies are being abused by non-state actors. These systems either don’t yet surpass the capabilities of traditional weaponry or still require humans to deploy them. While it would be nice to see international action to limit AI in weaponry, most scientists don’t see this as the biggest risk.

The biggest risks right now come from unregulated uses. It’s becoming easier and cheaper to make deepfakes that spread mis- and disinformation. Regulations are evolving, but fakes are still not illegal in most cases. This can potentially lead to abuse. A video of the Pentagon being bombed was released last year, and before people realized it was fake, there was a drop in the stock market and panic in parts of D.C. The video was totally debunked, but there are still people who believe it happened and is being covered up, because they saw it. The worst part is that the video didn’t violate any laws. Regulation must catch up with use.

There are other risks, like the environmental impact of energy consumption, but that will be mitigated by necessity (with some regulation).

What are some of the biggest opportunities for AI in the near future, and what are your predictions?

There was a lot of discussion of AI generating scripts and stories for Hollywood, but right now the results are pretty awful. When a human provides the creativity and the AI helps with the generation, though, productivity increases. The main impact is going to be in specialization. General language models make major mistakes, but AIs trained specifically on materials from particular fields are starting to show significant capability. Increased productivity for legal assistants, nurse practitioners, and other professions that assist specialists will allow greater access to services people could not otherwise afford.

My other prediction is easy — regulation of AI will progress. Here in New York, the governor announced a 10-year program to promote AI among a consortium of research institutions (including RPI) and private companies, and that included a set of guidelines for proper and legal use of AI. International bodies like the UN are engaging in discussions like this as well. We’ll also see the growth of public/private partnerships. IBM and Meta have created the AI Alliance (which RPI is also a part of) to look at how companies, universities, and governments can work together to maximize the positive and minimize misuse.

How will AI change our lives in the next five years?

There’s a saying that the best way to predict the future is to make it. I believe we’ll see tremendous growth in AI education in college and high school curricula, as well as specialized programs, such as one we’re launching at RPI, to look at the integration of AI with other emerging technologies.

The term “heterogeneous computing” has emerged to describe how AI computing will be able to interact with existing systems ranging from cell phones to supercomputers. Using AI to support quantum computing may also be an important aspect of heterogeneity. With respect to quantum and AI, I expect we will see the development of dedicated applications, especially in the biomedical arena.

How it will change our lives is hard to say, but it will, and RPI intends to remain a leader in defining the technology, engineering the systems that will keep it productive, and advising regulatory and other agencies on the ethical use of the technology.